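This article covers:

- System installation and network configuration

I previously used the full CentOS 7 image, but it is too heavy: it struggles inside a virtual machine, and I do not need a graphical desktop anyway. So this time I download the minimal edition.

## System installation and network configuration

Download the CentOS 7 minimal edition (download link).

Then use VMware to install three virtual machines.

A freshly installed minimal CentOS 7 ships with almost nothing: even the basic `ifconfig` command is missing and the network does not work. The first step is therefore to configure the network.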

### Configure the network

Running `ip addr` shows that the `ens33` interface has no IP address. Edit its configuration file:

```shell
cd /etc/sysconfig/network-scripts && vi ifcfg-ens33
```

```
TYPE=Ethernet
PROXY_METHOD=none
BROWSER_ONLY=no
BOOTPROTO=static ## changed
DEFROUTE=yes
IPV4_FAILURE_FATAL=no
IPV6INIT=yes
IPV6_AUTOCONF=yes
IPV6_DEFROUTE=yes
IPV6_FAILURE_FATAL=no
IPV6_ADDR_GEN_MODE=stable-privacy
NAME=ens33
UUID=cd6473a5-142f-4890-b4ba-5a711764a1d9
DEVICE=ens33
ONBOOT=yes ## changed as well
## the lines below were added
IPADDR=192.168.233.130
GATEWAY=192.168.233.2
DNS1=192.168.233.2
```

After editing, restart the network:

```shell
systemctl restart network
```

If there is still no network access, the likely cause is that DNS resolution is not configured. Add the DNS settings:

```shell
cd /etc
vi resolv.conf
```

Add the following:

```
search localdomain
nameserver 8.8.8.8
nameserver 8.8.4.4
```

Restart the network service again:

```shell
systemctl restart network
```

### Configure time

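The system time turns out to be wrong.

Fix it as follows:

```shell
## Set the time zone
ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

## Install ntp
yum -y install ntp
```

Configure the NTP server:

```shell
vi /etc/ntp.conf
```

In that file, add `server ntp.aliyun.com` and comment out the original `server` lines.

Restart the service:

```shell
service ntpd restart
```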
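The `ntp.conf` edit described above can also be scripted. The following is a minimal sketch, assuming the stock file marks its upstream servers with lines that start with `server`; the function name `use_aliyun_ntp` is made up for illustration and is meant to be run once per node.

```shell
#!/usr/bin/env bash
# use_aliyun_ntp FILE (hypothetical helper): comment out every active
# "server ..." line in FILE, then append the Aliyun server named in the text.
use_aliyun_ntp() {
  local conf="$1" tmp
  tmp="$(mktemp)" || return 1
  # Put "#" in front of lines that start with "server"; keep all other lines.
  sed -E 's/^([[:space:]]*server[[:space:]])/#\1/' "$conf" > "$tmp" || return 1
  echo "server ntp.aliyun.com" >> "$tmp"
  # Write back through cat so the original file keeps its owner and mode.
  cat "$tmp" > "$conf" && rm -f "$tmp"
}

# Usage (as root): use_aliyun_ntp /etc/ntp.conf && service ntpd restart
```

Writing to a temporary file first means a failed `sed` run leaves the real configuration untouched.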

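### Passwordless SSH and disabling the firewall

- Passwordless SSH

Run `ssh-keygen -t rsa` and press Enter at every prompt.

Then copy the contents of each node's `id_rsa.pub` into `authorized_keys` on all three nodes.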
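Copying keys by hand makes it easy to paste the same key twice. As a rough sketch, assuming each node's public key has already been collected into a local file, the helper below (the name `add_key_once` is hypothetical) appends a key only if that exact line is not present yet.

```shell
#!/usr/bin/env bash
# add_key_once PUBKEY_FILE AUTH_FILE (hypothetical helper): append the public
# key to AUTH_FILE unless that exact line is already there.
add_key_once() {
  local pub="$1" auth="$2"
  mkdir -p "$(dirname "$auth")" && touch "$auth" || return 1
  # -x: match whole lines, -F: fixed strings, -f: read the pattern from the key file
  grep -qxF -f "$pub" "$auth" || cat "$pub" >> "$auth"
}

# Usage on each node, once per collected key (file name is an example):
#   add_key_once golden-02.pub ~/.ssh/authorized_keys
```

Where password login is still enabled, OpenSSH's `ssh-copy-id user@host` does a similar job over the network.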

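Then SSH from every node to every node once (including itself), nine logins in total, which also records each host key. Tighten the permissions as well:

```shell
cd ~ && chmod 700 .ssh && chmod 600 .ssh/authorized_keys
```

- Disable the firewall

```shell
systemctl stop firewalld      ## stop the firewall
systemctl disable firewalld   ## do not start the firewall at boot
```

### Install required software

- Install the required packages with yum

```shell
yum install net-tools.x86_64   ## provides ifconfig; there is no separate ifconfig package, it ships in net-tools
yum install vim
```

- Install MySQL; for details see the separate post on installing MySQL on CentOS

- Install Java, Scala and Maven. These are straightforward, so they are skipped here.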
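Before moving on, it can help to confirm that the tools from this list are actually on `PATH` on each node. A small sketch follows; the helper name `missing_commands` and the exact command names passed to it are assumptions, not something the original setup prescribes.

```shell
#!/usr/bin/env bash
# missing_commands CMD... (hypothetical helper): print each command name
# that cannot be found on PATH, one per line.
missing_commands() {
  local cmd
  for cmd in "$@"; do
    command -v "$cmd" >/dev/null 2>&1 || echo "$cmd"
  done
}

# Usage: an empty result means everything was found.
#   missing_commands ifconfig vim java scala mvn mysql
```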

### Install Hadoop

- In `hadoop-env.sh`, set `JAVA_HOME` to an absolute path

```shell
export JAVA_HOME=/opt/modules/jdk1.8.0_321
```

- Configure `core-site.xml`

```xml
<configuration>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://golden-01:9000</value>
    <description>Specify the IP address and port number of the namenode</description>
  </property>
</configuration>
```

- Configure `hdfs-site.xml`

```xml
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
    <description>replications</description>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:/opt/modules/hadoop-3.2.2/yarn/yarn_data/hdfs/namenode</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file:/opt/modules/hadoop-3.2.2/yarn/yarn_data/hdfs/datanode</value>
  </property>
</configuration>
```

- Configure `mapred-site.xml`

```xml
<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.address</name>
    <value>golden-01:10020</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>golden-01:19888</value>
  </property>
</configuration>
```

- Configure `yarn-site.xml`

```xml
<configuration>
  <!-- Site specific YARN configuration properties -->
  <property>
    <name>yarn.resourcemanager.address</name>
    <value>golden-01:8032</value>
  </property>
  <property>
    <name>yarn.resourcemanager.scheduler.address</name>
    <value>golden-01:8030</value>
  </property>
  <property>
    <name>yarn.resourcemanager.resource-tracker.address</name>
    <value>golden-01:8031</value>
  </property>
  <property>
    <name>yarn.resourcemanager.admin.address</name>
    <value>golden-01:8033</value>
  </property>
  <property>
    <name>yarn.resourcemanager.webapp.address</name>
    <value>golden-01:8088</value>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
    <value>org.apache.hadoop.mapred.ShuffleHandler</value>
  </property>
</configuration>
```

- Configure `workers`

```
golden-01
golden-02
golden-03
```

- Distribute the configured `hadoop-3.2.2` directory to every data node

- 格式化NameNode

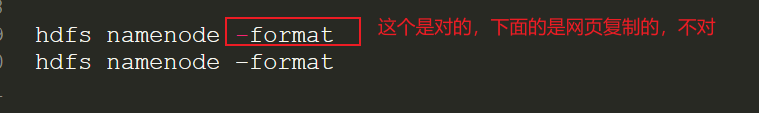

出现hdfs namenode -formatnamenode has been successfully formatted.就证明成功了

这里一开始出现一个情况,我刚启动2022-03-20 03:47:54,102 INFO common.Storage: Storage directory /opt/modules/hadoop-3.2.2/yarn/yarn_data/hdfs/namenode has been successfully formatted. 2022-03-20 03:47:54,124 INFO namenode.FSImageFormatProtobuf: Saving image file /opt/modules/hadoop-3.2.2/yarn/yarn_data/hdfs/namenode/current/fsimage.ckpt_0000000000000000000 using no compression 2022-03-20 03:47:54,220 INFO namenode.FSImageFormatProtobuf: Image file /opt/modules/hadoop-3.2.2/yarn/yarn_data/hdfs/namenode/current/fsimage.ckpt_0000000000000000000 of size 399 bytes saved in 0 seconds . 2022-03-20 03:47:54,226 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0 2022-03-20 03:47:54,230 INFO namenode.FSImage: FSImageSaver clean checkpoint: txid=0 when meet shutdown. 2022-03-20 03:47:54,231 INFO namenode.NameNode: SHUTDOWN_MSG: /************************************************************ SHUTDOWN_MSG: Shutting down NameNode at golden-01/192.168.233.130 ************************************************************/hdfs namenode -format,就失败了,直接就报SHUTDOWN_MSG: Shutting down NameNode at golden-01/192.168.233.130

问题的原因:从网上复制过来的

hdfs namenode -format,这个-format其实是减号,是不对的
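Look-alike dashes are hard to spot by eye. One way to catch them before running a pasted command is to test the text for non-ASCII bytes. This is only a sketch: `has_non_ascii` is a hypothetical helper, and it flags any byte outside printable ASCII, not just dashes.

```shell
#!/usr/bin/env bash
# has_non_ascii STRING (hypothetical helper): succeed if STRING contains any
# byte outside printable ASCII, such as a typographic dash pasted from a web page.
has_non_ascii() {
  # In the C locale every byte is one character, so [^ -~] matches
  # anything that is not between space and tilde.
  printf '%s' "$1" | LC_ALL=C grep -q '[^ -~]'
}

cmd='hdfs namenode -format'
if has_non_ascii "$cmd"; then
  echo "suspicious characters in: $cmd"
else
  echo "plain ASCII: $cmd"
fi
```

Retyping the option by hand is the simplest fix once such a character has been found.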

Another guess: could it be because I ran the initialization as the root user? Starting the cluster as root also failed:

```
Starting namenodes on [master]
ERROR: Attempting to operate on hdfs namenode as root
ERROR: but there is no HDFS_NAMENODE_USER defined. Aborting operation.
Starting datanodes
ERROR: Attempting to operate on hdfs datanode as root
ERROR: but there is no HDFS_DATANODE_USER defined. Aborting operation.
Starting secondary namenodes [slave1]
ERROR: Attempting to operate on hdfs secondarynamenode as root
ERROR: but there is no HDFS_SECONDARYNAMENODE_USER defined. Aborting operation.
```

So I switched to a regular user to start the cluster.

Alternatively, add the following variables at the top of both `start-dfs.sh` and `stop-dfs.sh`:

```shell
#!/usr/bin/env bash
HDFS_DATANODE_USER=root
HADOOP_SECURE_DN_USER=hdfs
HDFS_NAMENODE_USER=root
HDFS_SECONDARYNAMENODE_USER=root
```

Likewise, add the following at the top of `start-yarn.sh` and `stop-yarn.sh`:

```shell
#!/usr/bin/env bash
YARN_RESOURCEMANAGER_USER=root
HADOOP_SECURE_DN_USER=yarn
YARN_NODEMANAGER_USER=root
```

In the end, running as root turned out not to be the cause of the format failure.
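Hadoop 3's shell scripts also read these per-daemon user variables from `etc/hadoop/hadoop-env.sh`, which avoids editing four start/stop scripts. The sketch below only prints the export lines; the helper name `hadoop_user_exports` is hypothetical, and running the daemons as `root` simply mirrors the values used above.

```shell
#!/usr/bin/env bash
# hadoop_user_exports USER (hypothetical helper): print export lines for the
# per-daemon user variables named in the error messages and the text above.
hadoop_user_exports() {
  local user="$1" var
  for var in HDFS_NAMENODE_USER HDFS_DATANODE_USER HDFS_SECONDARYNAMENODE_USER \
             YARN_RESOURCEMANAGER_USER YARN_NODEMANAGER_USER; do
    echo "export ${var}=${user}"
  done
}

# Usage (path taken from this setup):
#   hadoop_user_exports root >> /opt/modules/hadoop-3.2.2/etc/hadoop/hadoop-env.sh
```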

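For a fully distributed Hadoop deployment (including HA), see the article on Hadoop high-availability (HA) cluster deployment and three ways to verify it.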

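### Install ZooKeeper

Create `zoo.cfg` by copying the sample configuration that ships in the `conf` directory.

In `zoo.cfg`, change the following:

```
dataDir=/opt/modules/zookeeper-3.8.0/zkData
dataLogDir=/opt/modules/zookeeper-3.8.0/zkDataLog
server.1=golden-01:2888:3888
server.2=golden-02:2888:3888
server.3=golden-03:2888:3888
```

On each of the three servers in turn, run `echo id > /opt/modules/zookeeper-3.8.0/zkData/myid` to create the `myid` file that holds the ZooKeeper server number, where `id` is the number from that server's `server.N` line (1, 2 or 3).

(Note: `myid` must be created in the `dataDir` configured in that node's own `zoo.cfg`.)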
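To avoid typing the wrong number on a node, the id can be looked up from `zoo.cfg` itself. The following sketch assumes short host names without dots, as used in this setup; the helper name `myid_for` is hypothetical.

```shell
#!/usr/bin/env bash
# myid_for HOST CFG (hypothetical helper): print N for the line
# "server.N=HOST:..." in CFG; print nothing if HOST is not listed.
myid_for() {
  local host="$1" cfg="$2"
  # Split on ".", "=" and ":" so the fields are: server, N, HOST, port, port.
  awk -F'[.=:]' -v h="$host" '$1 == "server" && $3 == h { print $2 }' "$cfg"
}

# Usage on each node (paths taken from this setup):
#   myid_for "$(hostname)" /opt/modules/zookeeper-3.8.0/conf/zoo.cfg \
#     > /opt/modules/zookeeper-3.8.0/zkData/myid
```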

Start ZooKeeper:

```shell
zkServer.sh start
```

Note that it has to be started on every node. Otherwise the following error is reported:

```
ZooKeeper JMX enabled by default
Using config: /opt/modules/zookeeper-3.8.0/bin/../conf/zoo.cfg
Client port found: 2181. Client address: localhost. Client SSL: false.
Error contacting service. It is probably not running.
```

Check the log with `./zkServer.sh start-foreground`:

```
2022-03-20 08:29:37,669 [myid:] - WARN [QuorumConnectionThread-[myid=1]-1:o.a.z.s.q.QuorumCnxManager@401] - Cannot open channel to 2 at election address /192.168.233.131:3888
java.net.ConnectException: Connection refused (Connection refused)
    at java.net.PlainSocketImpl.socketConnect(Native Method)
    at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:476)
    at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:218)
    at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:200)
    at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:394)
    at java.net.Socket.connect(Socket.java:606)
    at org.apache.zookeeper.server.quorum.QuorumCnxManager.initiateConnection(QuorumCnxManager.java:384)
    at org.apache.zookeeper.server.quorum.QuorumCnxManager$QuorumConnectionReqThread.run(QuorumCnxManager.java:458)
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
    at java.lang.Thread.run(Thread.java:750)
2022-03-20 08:29:37,669 [myid:] - WARN [QuorumConnectionThread-[myid=1]-2:o.a.z.s.q.QuorumCnxManager@401] - Cannot open channel to 3 at election address /192.168.233.132:3888
java.net.ConnectException: Connection refused (Connection refused)
    at java.net.PlainSocketImpl.socketConnect(Native Method)
    at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:476)
    at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:218)
    at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:200)
    at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:394)
    at java.net.Socket.connect(Socket.java:606)
    at org.apache.zookeeper.server.quorum.QuorumCnxManager.initiateConnection(QuorumCnxManager.java:384)
    at org.apache.zookeeper.server.quorum.QuorumCnxManager$QuorumConnectionReqThread.run(QuorumCnxManager.java:458)
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
    at java.lang.Thread.run(Thread.java:750)
```

In other words, the ZooKeeper service has to be started on every node; until then the election ports on the other nodes are unreachable.
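A quick way to see which nodes are already listening on the election port is to probe it from one machine. This is a sketch under a few assumptions: bash built with `/dev/tcp` support, the coreutils `timeout` command available, and the host names and port 3888 from the `zoo.cfg` above. Both function names are hypothetical.

```shell
#!/usr/bin/env bash
# port_open HOST PORT (hypothetical helper): succeed if a TCP connection to
# HOST:PORT can be opened within 2 seconds, using bash's /dev/tcp feature.
port_open() {
  # In the inner bash, $0 is the host and $1 is the port.
  timeout 2 bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$1" "$2" 2>/dev/null
}

# check_quorum_ports: report the election port on every node.
check_quorum_ports() {
  local host
  for host in golden-01 golden-02 golden-03; do
    if port_open "$host" 3888; then
      echo "$host:3888 open"
    else
      echo "$host:3888 closed"
    fi
  done
}

# Usage: check_quorum_ports
```

A node reported as closed is one where `zkServer.sh start` has not been run yet, or where a firewall still blocks the port.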

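### Install Kafka

Edit `config/server.properties`:

```properties
# must be unique for each broker in the cluster
broker.id=1
# this directory has to be created manually
log.dirs=/opt/modules/kafka-3.1.0/kafka-logs
zookeeper.connect=golden-01:2181,golden-02:2181,golden-03:2181
```

Start Kafka. It has to be started on every node:

```shell
nohup bin/kafka-server-start.sh config/server.properties >> output.log 2>&1 &
```

The following script automates starting it on every node:

```shell
echo "############# starting Kafka on golden-01 ###########"
ssh -Tq golden@golden-01 <<EOF
source /etc/profile
cd /opt/modules/kafka-3.1.0
nohup bin/kafka-server-start.sh config/server.properties >output.log 2>&1 &
EOF

echo "############# starting Kafka on golden-02 ###########"
ssh -Tq golden@golden-02 <<EOF
source /etc/profile
cd /opt/modules/kafka-3.1.0
nohup bin/kafka-server-start.sh config/server.properties >output.log 2>&1 &
EOF

echo "############# starting Kafka on golden-03 ###########"
ssh -Tq golden@golden-03 <<EOF
source /etc/profile
cd /opt/modules/kafka-3.1.0
nohup bin/kafka-server-start.sh config/server.properties >output.log 2>&1 &
EOF
```

Without `-Tq`, ssh prints `Pseudo-terminal will not be allocated because stdin is not a terminal`.

As the message says, no pseudo-terminal can be allocated because standard input is not a terminal.

One fix is to add `-tt`, which forces pseudo-terminal allocation even when standard input is not a terminal.

Adding `-Tq` works as well: `-T` disables pseudo-terminal allocation and `-q` suppresses the warning. With `-tt` the commands are echoed as they run; with `-Tq` they are executed without printing what was run.

For more, see the article on Kafka cluster deployment.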
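The three near-identical blocks in the start script can be folded into a loop. The sketch below keeps the same commands and the same `ssh -Tq` invocation; the user name `golden`, the host names and the install path come from the script above, while the function names are made up for illustration.

```shell
#!/usr/bin/env bash
NODES=(golden-01 golden-02 golden-03)
KAFKA_HOME=/opt/modules/kafka-3.1.0

# remote_start_script (hypothetical helper): print the commands each node runs.
remote_start_script() {
  cat <<EOF
source /etc/profile
cd ${KAFKA_HOME}
nohup bin/kafka-server-start.sh config/server.properties > output.log 2>&1 &
EOF
}

# start_all (hypothetical helper): feed the same script to every node over ssh.
start_all() {
  local node
  for node in "${NODES[@]}"; do
    echo "############# starting Kafka on ${node} ###########"
    remote_start_script | ssh -Tq "golden@${node}"
  done
}

# Usage: start_all
```

Adding a node then only means extending `NODES`. Kafka's start script also accepts a `-daemon` flag, which could replace the `nohup ... &` wrapper.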

### Install Flink

### Install Hive

- Create a MySQL account for Hive and grant it sufficient privileges:

```
[lighthouse@VM-4-14-centos hive-2.3.9]$ mysql -uroot -pmysql
mysql> CREATE USER 'hive' IDENTIFIED BY 'Hive123!@#';
mysql> GRANT ALL PRIVILEGES ON *.* TO 'hive'@'%' WITH GRANT OPTION;
mysql> flush privileges;
```

- Create a dedicated metastore database for Hive. Remember to log in with the `hive` account created above when creating it.

```
[lighthouse@VM-4-14-centos hive-2.3.9]$ mysql -uhive -p'Hive123!@#'
mysql> create database hive;
```

- Download the Hive package from the official site; hive-2.3.9 is used here

```shell
tar zxvf apache-hive-2.3.9-bin.tar.gz -C .
mv apache-hive-2.3.9-bin/ hive-2.3.9
cd hive-2.3.9/conf && cp hive-default.xml.template hive-site.xml
```

- Edit `hive-site.xml`

```xml
<configuration>
  <property>
    <name>hive.metastore.local</name>
    <value>true</value>
  </property>
  <property>
    <name>hive.metastore.db.type</name>
    <value>mysql</value>
    <description>
      Expects one of [derby, oracle, mysql, mssql, postgres].
      Type of database used by the metastore. Information schema &amp; JDBCStorageHandler depend on it.
    </description>
  </property>
  <property>
    <name>hive.metastore.warehouse.dir</name>
    <value>/user/hive/warehouse</value>
    <description>location of default database for the warehouse</description>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://golden-02:3306/hive?characterEncoding=UTF-8</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.jdbc.Driver</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>hive</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>mysql</value>
  </property>
  <!-- metastore -->
  <property>
    <name>hive.metastore.uris</name>
    <value>thrift://golden-02:9083</value>
  </property>
  <!-- hiveserver2 -->
  <property>
    <name>hive.server2.thrift.port</name>
    <value>10000</value>
  </property>
  <property>
    <name>hive.server2.thrift.bind.host</name>
    <value>golden-02</value>
  </property>
  <property>
    <name>hive.server2.thrift.client.user</name>
    <value>gujincheng</value>
    <description>Username to use against thrift client</description>
  </property>
  <property>
    <name>hive.server2.thrift.client.password</name>
    <value>980071</value>
    <description>Password to use against thrift client</description>
  </property>
  <property>
    <name>hive.server2.enable.doAs</name>
    <value>false</value>
  </property>
</configuration>
```

- Download the JDBC connector jar from the MySQL website and put it in the `hive/lib` directory

- Initialize the Hive metastore database

```shell
schematool -initSchema -dbType mysql
```

- Start Hive

It fails with the following error:

```
Exception in thread "main" java.lang.NoSuchMethodError: com.google.common.base.Preconditions.checkArgument(ZLjava/lang/String;Ljava/lang/Object;)V
    at org.apache.hadoop.conf.Configuration.set(Configuration.java:1357)
    at org.apache.hadoop.conf.Configuration.set(Configuration.java:1338)
    at org.apache.hadoop.mapred.JobConf.setJar(JobConf.java:536)
    at org.apache.hadoop.mapred.JobConf.setJarByClass(JobConf.java:554)
    at org.apache.hadoop.mapred.JobConf.<init>(JobConf.java:448)
    at org.apache.hadoop.hive.conf.HiveConf.initialize(HiveConf.java:4051)
```

This happens because the `guava` jar bundled with Hive and the one bundled with Hadoop are different versions.

Delete the older jar and use the newer of the two in both places:

```shell
cd /opt/modules/hadoop-3.2.2/share/hadoop/common/lib/ && cp guava-27.0-jre.jar /opt/modules/hive-2.3.9/lib/
```

Starting again produces the following error:

```
Exception in thread "main" java.lang.IllegalArgumentException: java.net.URISyntaxException: Relative path in absolute URI: ${system:java.io.tmpdir%7D/$%7Bsystem:user.name%7D
    at org.apache.hadoop.fs.Path.initialize(Path.java:263)
    at org.apache.hadoop.fs.Path.<init>(Path.java:221)
    at org.apache.hadoop.hive.ql.session.SessionState.createSessionDirs(SessionState.java:663)
    at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:586)
    at org.apache.hadoop.hive.ql.session.SessionState.beginStart(SessionState.java:553)
    at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:750)
    at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:686)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:498)
    at org.apache.hadoop.util.RunJar.run(RunJar.java:323)
    at org.apache.hadoop.util.RunJar.main(RunJar.java:236)
Caused by: java.net.URISyntaxException: Relative path in absolute URI: ${system:java.io.tmpdir%7D/$%7Bsystem:user.name%7D
    at java.net.URI.checkPath(URI.java:1823)
    at java.net.URI.<init>(URI.java:745)
    at org.apache.hadoop.fs.Path.initialize(Path.java:260)
    ... 12 more
```

This happens because paths in `hive-site.xml` use variables that the system does not resolve. Replace the variables with absolute paths.

Open `hive-site.xml` in vim and run:

```
:%s/${system:java.io.tmpdir}\/${system:user.name}/\/tmp\/hive\/lighthouse/g
:%s/${system:java.io.tmpdir}/\/tmp\/hive/g
```

Then another error appears:

```
FAILED: SemanticException org.apache.hadoop.hive.ql.metadata.HiveException: java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient
```

This is because Hive 2 requires the metastore database to be initialized:

```shell
schematool -dbType mysql -initSchema
```

Once initialization has finished, Hive can be used.
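The two vim substitutions used for the `${system:...}` placeholders can also be applied non-interactively, which is handy when the same file has to be fixed on several machines. A minimal sketch with `sed` follows; the helper name `fix_hive_tmp_paths` is hypothetical, and the user name is assumed to contain no `|` or `&` characters.

```shell
#!/usr/bin/env bash
# fix_hive_tmp_paths FILE USER (hypothetical helper): replace the unresolved
# ${system:...} placeholders in FILE with absolute paths under /tmp/hive.
fix_hive_tmp_paths() {
  local file="$1" user="$2" tmp
  tmp="$(mktemp)" || return 1
  # Same order as the vim commands: the longer pattern first, then the rest.
  sed -e 's|\${system:java\.io\.tmpdir}/\${system:user\.name}|/tmp/hive/'"$user"'|g' \
      -e 's|\${system:java\.io\.tmpdir}|/tmp/hive|g' "$file" > "$tmp" || return 1
  cat "$tmp" > "$file" && rm -f "$tmp"
}

# Usage (path and user taken from this setup):
#   fix_hive_tmp_paths /opt/modules/hive-2.3.9/conf/hive-site.xml lighthouse
```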

Starting hiveserver2 and the metastore reports the following error:

```
Exception in thread "org.apache.hadoop.hive.common.JvmPauseMonitor$Monitor@1eb9bf60" java.lang.IllegalAccessError: tried to access method com.google.common.base.Stopwatch.<init>()V from class org.apache.hadoop.hive.common.JvmPauseMonitor$Monitor
    at org.apache.hadoop.hive.common.JvmPauseMonitor$Monitor.run(JvmPauseMonitor.java:176)
    at java.lang.Thread.run(Thread.java:748)
```

This error can be ignored. It appears to come from the monitoring thread and does not affect functionality.

Connecting to Hive with beeline fails with the following error:

```
beeline> !connect jdbc:hive2://golden-02:10000
Connecting to jdbc:hive2://golden-02:10000
Enter username for jdbc:hive2://golden-02:10000: gujincheng
Enter password for jdbc:hive2://golden-02:10000: ******
22/04/08 09:02:23 [main]: WARN jdbc.HiveConnection: Failed to connect to golden-02:10000
Error: Could not open client transport with JDBC Uri: jdbc:hive2://golden-02:10000: Failed to open new session: java.lang.RuntimeException: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.authorize.AuthorizationException): User: gujincheng is not allowed to impersonate gujincheng (state=08S01,code=0)
```

The fix is a setting in `conf/hive-site.xml`.

By default, HiveServer2 runs a query as the user who submitted it. If this option is set to `false`, queries run as the user that runs the hiveserver2 process.

```xml
<property>
  <name>hive.server2.enable.doAs</name>
  <value>false</value>
</property>
```

Running a Hive SQL statement then fails with the following error:

```
FAILED: Execution Error, return code 2 from org.apache.hadoop.hive.ql.exec.mr.MapRedTask
```

Solution: add the following to Hadoop's `yarn-site.xml`. The paths must match the actual Hadoop installation directory (`/opt/modules/hadoop-3.2.2` in this setup); the `hadoop classpath` command prints the value for the local installation.

```xml
<property>
  <name>yarn.application.classpath</name>
  <value>/opt/modules/hadoop-3.0.0/etc/hadoop:/opt/modules/hadoop-3.0.0/share/hadoop/common/lib/*:/opt/modules/hadoop-3.0.0/share/hadoop/common/*:/opt/modules/hadoop-3.0.0/share/hadoop/hdfs:/opt/modules/hadoop-3.0.0/share/hadoop/hdfs/lib/*:/opt/modules/hadoop-3.0.0/share/hadoop/hdfs/*:/opt/modules/hadoop-3.0.0/share/hadoop/mapreduce/*:/opt/modules/hadoop-3.0.0/share/hadoop/yarn:/opt/modules/hadoop-3.0.0/share/hadoop/yarn/lib/*:/opt/modules/hadoop-3.0.0/share/hadoop/yarn/*</value>
</property>
```

- Start hiveserver2

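The relevant settings in `hive-site.xml`:

```xml
<!-- port and bind host of the hiveserver2 service -->
<property>
  <name>hive.server2.thrift.port</name>
  <value>10000</value>
</property>
<property>
  <name>hive.server2.thrift.bind.host</name>
  <value>localhost</value>
</property>
```

After starting, clients cannot connect to hiveserver2, and no error is reported.

Solution: add the following to `core-site.xml`.

```xml
<!-- Add the following to core-site.xml -->
<!-- for the case where port 10000 cannot be reached -->
<property>
  <name>hadoop.proxyuser.root.hosts</name>
  <value>*</value>
</property>
<property>
  <name>hadoop.proxyuser.root.groups</name>
  <value>*</value>
</property>
<!-- "master" is the configured host name; check it with the hostname command -->
<property>
  <name>hadoop.proxyuser.master.hosts</name>
  <value>*</value>
</property>
<property>
  <name>hadoop.proxyuser.master.groups</name>
  <value>*</value>
</property>
<property>
  <name>hadoop.proxyuser.hive.hosts</name>
  <value>*</value>
</property>
<property>
  <name>hadoop.proxyuser.hive.groups</name>
  <value>*</value>
</property>
```

Note that Hadoop defines the middle part of `hadoop.proxyuser.<name>.hosts` as the name of the proxying user, so the entry that matters is the one for the user that runs HiveServer2.

Then restart Hadoop and restart hiveserver2.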

### Install HBase

https://www.zsjweblog.com/2020/11/25/hbase-2-3-3-ha%E6%90%AD%E5%BB%BA/

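### Install SQL Server

- Install SQL Server

```shell
curl -o /etc/yum.repos.d/mssql-server.repo https://packages.microsoft.com/config/rhel/7/mssql-server-2019.repo
yum install -y mssql-server
/opt/mssql/bin/mssql-conf setup
```

This kept failing on the CentOS minimal edition and only succeeded on a full CentOS 7 installation; the minimal edition lacks too many base packages.

For reference, see the article on installing SQL Server for Linux (yum, silent install, environment-variable install, Docker install).

- Enable SQL Server Agent

```shell
# Enable SQL Server Agent
sudo /opt/mssql/bin/mssql-conf set sqlagent.enabled true
# Restart the service for the change to take effect
sudo systemctl restart mssql-server
```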

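### Accessing SQL Server from CentOS

Install sqlcmd:

```shell
curl -o /etc/yum.repos.d/msprod.repo https://packages.microsoft.com/config/rhel/7/prod.repo
yum remove unixODBC-utf16 unixODBC-utf16-devel
yum install -y mssql-tools unixODBC-devel
echo 'export PATH="$PATH:/opt/mssql-tools/bin"' >> ~/.bash_profile
echo 'export PATH="$PATH:/opt/mssql-tools/bin"' >> ~/.bashrc
source ~/.bashrc
```

Run sqlcmd with the SQL Server name (`-S`), user name (`-U`) and password (`-P`). In this tutorial the connection is local, so the server name is `localhost`. The user name is `SA` and the password is the one provided for the SA account during setup.

```shell
sqlcmd -S localhost -U SA -P '<YourPassword>'
```

In this tool I could not get a SQL statement to span multiple lines; I will look into which parameters to adjust later.

SQL Server's syntax differs considerably from MySQL's: a statement is only executed after `GO` is entered.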
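One way around typing long statements at the prompt is to keep the SQL in a file that ends with `GO` and pass it to sqlcmd with its `-i` (input file) option. The sketch below only builds such a file; the helper name `write_batch`, the file path and the sample query are illustrative assumptions.

```shell
#!/usr/bin/env bash
# write_batch FILE SQL... (hypothetical helper): write each SQL fragment to
# FILE on its own line and end the batch with GO so that sqlcmd executes it.
write_batch() {
  local file="$1"; shift
  printf '%s\n' "$@" GO > "$file"
}

# Usage: a multi-line query kept in a file and run with -i.
#   write_batch /tmp/q.sql "SELECT name" "FROM sys.databases" "ORDER BY name;"
#   sqlcmd -S localhost -U SA -P '<YourPassword>' -i /tmp/q.sql
```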