This post covers:

Dinky usage notes

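Build

Our company cluster is based on CDH 6.2.0. To avoid compatibility problems later, I do not use Dinky's official release package. Instead I build from source and specify the Hadoop version at build time.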

git clone https://github.com/DataLinkDC/dinky.git

cd dinky

git checkout v1.2.4

mvn clean package -DskipTests=true -Dspotless.check.skip=true -Dhadoop.version=3.0.0-cdh6.2.0 -P prod,flink-single-version,flink-1.17

The build has to run on JDK 1.8, where it fails with the error Failed to execute goal com.diffplug.spotless:spotless-maven-plugin:2.27.1:

Solution: add -Dspotless.check.skip=true to the build command (the mvn command above already includes it).
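Since the build depends on JDK 1.8, a quick check of the active JDK before running mvn can save a failed build. The following is a minimal sketch; the function names `is_jdk8` and `current_java_version` are hypothetical helpers and not part of Dinky.

```shell
#!/usr/bin/env bash
# Returns 0 when the given version string belongs to JDK 1.8, non-zero otherwise.
is_jdk8() {
  case "$1" in
    1.8|1.8.*) return 0 ;;
    *) return 1 ;;
  esac
}

# Extracts the quoted version from `java -version`, which prints to stderr.
current_java_version() {
  java -version 2>&1 | awk -F'"' '/version/ {print $2; exit}'
}

# Only report when a java binary is on PATH; this never aborts the shell.
if command -v java >/dev/null 2>&1; then
  v="$(current_java_version)"
  if is_jdk8 "$v"; then
    echo "JDK $v detected, OK to build"
  else
    echo "JDK $v detected, switch JAVA_HOME to a 1.8 JDK before running mvn" >&2
  fi
fi
```

The check only looks at the java binary found on PATH. If Maven is configured to use a different JDK, that one would need to be checked instead.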

Note: Dinky's Guava version differs from the Guava version inside the CDH cluster. Change the Guava version in the Dinky source to <guava.version>11.0.2</guava.version> before building.
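The Guava change can also be scripted so it is easy to repeat after a fresh checkout. This is a sketch under one assumption: the property appears as a `<guava.version>` element in a pom file, as the note above suggests. Which pom file holds it is not stated here, so the path passed in is up to you. `set_guava_version` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# set_guava_version POM VERSION
# Rewrites the <guava.version> property in POM to VERSION.
# Returns non-zero when the file has no such property, so a wrong path is noticed.
set_guava_version() {
  local pom="$1"
  local version="$2"
  local tmp
  local rc

  grep -q '<guava\.version>' "$pom" || return 1
  tmp="$(mktemp)" || return 1

  # Write to a temp file first, then copy back, so file permissions are kept
  # and the command works with both GNU and BSD sed.
  sed "s|<guava\.version>[^<]*</guava\.version>|<guava.version>${version}</guava.version>|" "$pom" > "$tmp" \
    && cat "$tmp" > "$pom"
  rc=$?

  rm -f "$tmp"
  return "$rc"
}
```

Assuming the property lives in the root pom.xml, the call would be set_guava_version pom.xml 11.0.2, run from the dinky source directory before the mvn build.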

Installation

The compiled installation package is under build/. Upload build/dinky-release-1.17-1.2.4.tar.gz to the server.

- Create the database

    mysql -uroot -p
    # Create the database
    mysql> create database dinky;
    # Grant privileges
    mysql> grant all privileges on dinky.* to 'dinky'@'%' identified by 'dinky' with grant option;
    mysql> flush privileges;

- Extract and configure

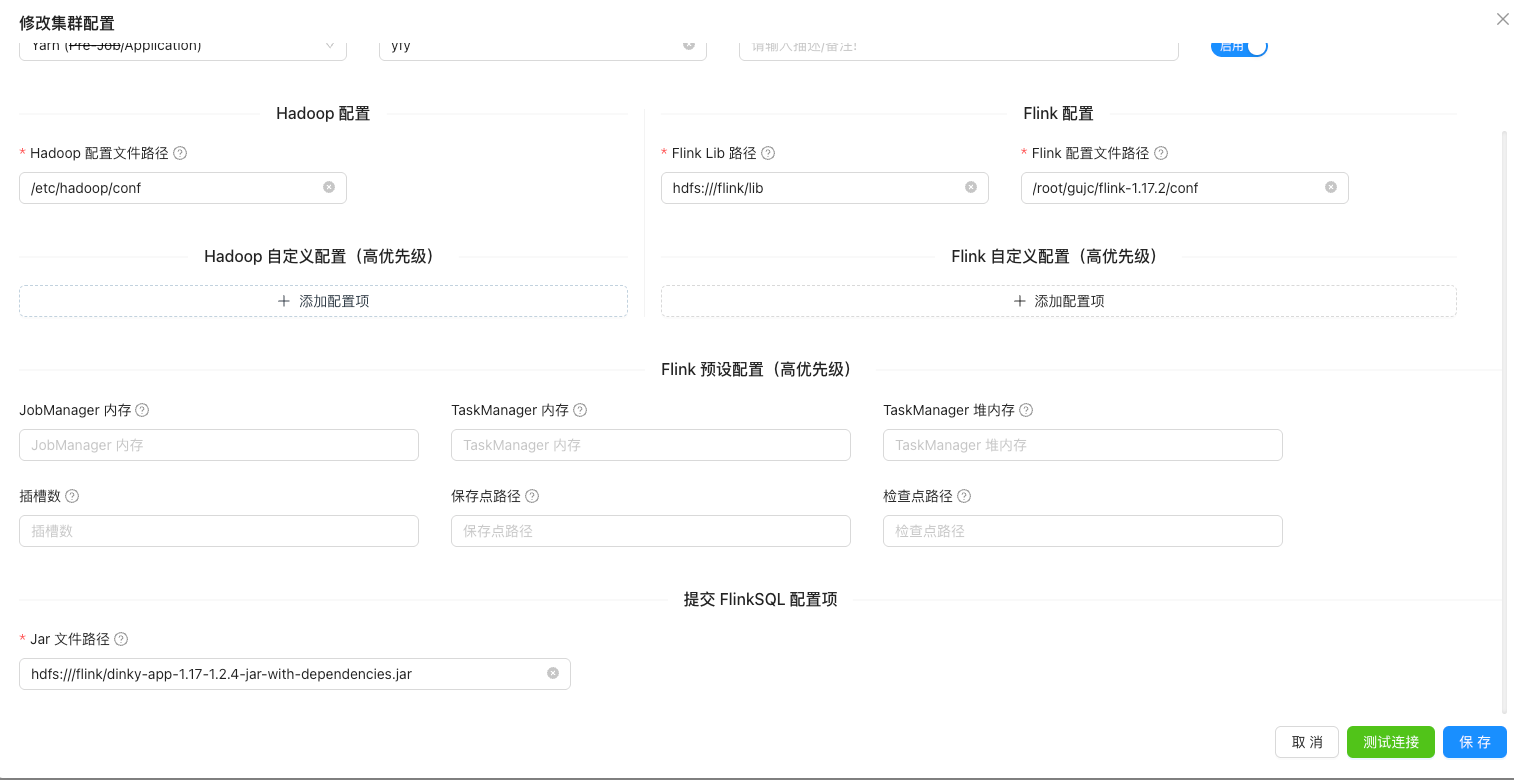

    ## Extract
    tar zxvf dinky-release-1.17-1.2.4.tar.gz -C .
    ## Create the dinky database and the dinky user and password in MySQL
    ## Edit application.yml and application-mysql.yml
    ## Prepare the jars from Flink's lib directory
    cp /opt/soft/flink-1.17.2/lib/* dinky/extends/flink1.17
    ## Also upload the jars from Flink's lib directory to /flink/lib on HDFS
    hadoop fs -put /opt/soft/flink-1.17.2/lib/* /flink/lib
    cp dinky/dinky-client/dinky-client-hadoop/target/dinky-client-hadoop-1.2.4.jar dinky/lib
    ## Start Dinky
    bin/auto.sh start 1.17
    ## Stop Dinky
    bin/auto.sh stop

- Register the cluster in Dinky

From here on it is ordinary day-to-day usage.

Problems
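Before starting Dinky, it may be worth confirming that the two copy steps from the installation section took effect, since a missing Hadoop client jar only shows up later as a NoClassDefFoundError. The sketch below checks the directory names used in this post (lib and extends/flink1.17). `check_dinky_layout` is a hypothetical helper, not a Dinky command.

```shell
#!/usr/bin/env bash
# check_dinky_layout DINKY_HOME
# Prints one line per missing piece and returns non-zero if anything is missing.
check_dinky_layout() {
  local home="$1"
  local missing=0
  local found
  local f

  # The Hadoop client jar that was copied into lib
  found=0
  for f in "$home"/lib/dinky-client-hadoop-*.jar; do
    if [ -e "$f" ]; then found=1; fi
  done
  if [ "$found" -eq 0 ]; then
    echo "missing: $home/lib/dinky-client-hadoop-*.jar"
    missing=1
  fi

  # At least one Flink jar copied into extends/flink1.17
  found=0
  for f in "$home"/extends/flink1.17/*.jar; do
    if [ -e "$f" ]; then found=1; fi
  done
  if [ "$found" -eq 0 ]; then
    echo "missing: jars under $home/extends/flink1.17"
    missing=1
  fi

  return "$missing"
}
```

With the layout used in this post, the call would be check_dinky_layout dinky, followed by bin/auto.sh start 1.17 only when it returns 0.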

1. Caused by: java.lang.NoClassDefFoundError: org/apache/hadoop/yarn/conf/YarnConfiguration

Caused by: java.lang.NoClassDefFoundError: org/apache/hadoop/yarn/conf/YarnConfiguration

at org.dinky.gateway.yarn.YarnGateway.initYarnClient(YarnGateway.java:175) ~[dinky-gateway-1.2.4.jar:?]

at org.dinky.gateway.yarn.YarnGateway.test(YarnGateway.java:280) ~[dinky-gateway-1.2.4.jar:?]

Solution: copy dinky-client-hadoop.jar into Dinky's lib directory.

2. The build has to run on JDK 1.8, where it fails with the error Failed to execute goal com.diffplug.spotless:spotless-maven-plugin:2.27.1:

Solution: add -Dspotless.check.skip=true to the build command.

3. Caused by: java.lang.VerifyError: (class: com/google/common/collect/Interners, method: newWeakInterner signature: ()Lcom/google/common/collect/Interner;) Incompatible argument to function

Caused by: java.lang.VerifyError: (class: com/google/common/collect/Interners, method: newWeakInterner signature: ()Lcom/google/common/collect/Interner;) Incompatible argument to function

at org.apache.hadoop.util.StringInterner.<clinit>(StringInterner.java:40) ~[hadoop-common-3.0.0-cdh6.2.0.jar:?]

at org.apache.hadoop.conf.Configuration$Parser.handleEndElement(Configuration.java:3113) ~[hadoop-common-3.0.0-cdh6.2.0.jar:?]

at org.apache.hadoop.conf.Configuration$Parser.parseNext(Configuration.java:3184) ~[hadoop-common-3.0.0-cdh6.2.0.jar:?]

at org.apache.hadoop.conf.Configuration$Parser.parse(Configuration.java:2982) ~[hadoop-common-3.0.0-cdh6.2.0.jar:?]

at org.apache.hadoop.conf.Configuration.loadResource(Configuration.java:2876) ~[hadoop-common-3.0.0-cdh6.2.0.jar:?]

at org.apache.hadoop.conf.Configuration.loadResources(Configuration.java:2838) ~[hadoop-common-3.0.0-cdh6.2.0.jar:?]

at org.apache.hadoop.conf.Configuration.getProps(Configuration.java:2715) ~[hadoop-common-3.0.0-cdh6.2.0.jar:?]

at org.apache.hadoop.conf.Configuration.get(Configuration.java:1186) ~[hadoop-common-3.0.0-cdh6.2.0.jar:?]

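at org.apache.hadoop.conf.Configuration.getTrimmed(Configuration.java:1240) ~[hadoop-common-3.0.0-cdh6.2.0.jar:?]

This error is reported when submitting a job to YARN. The cause is that the Guava version used by Dinky differs from the Guava version in CDH. Change the Guava version in the Dinky source to <guava.version>11.0.2</guava.version>, rebuild, and then replace dinky-app-1.17-1.2.4-jar-with-dependencies.jar with the rebuilt one.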